Quick Summary: This guide gives leaders a practical roadmap to build an AI model that delivers real operational impact. You learn what to clarify before development, how each stage works, mistakes that slow progress, and the true cost of implementation. The insights help you avoid uncertainty and move toward a system that improves accuracy, stability, and daily decisions. If your organisation wants AI that performs reliably, this guide shows the path forward.

When leaders plan to build an AI model, the early stage often brings more friction than expected. Goals sound clear in meetings, yet progress slows once the team starts debating data quality, training methods, and alignment with business outcomes. You want movement, but the path feels unclear, and every delay affects departments that depend on reliable insights.

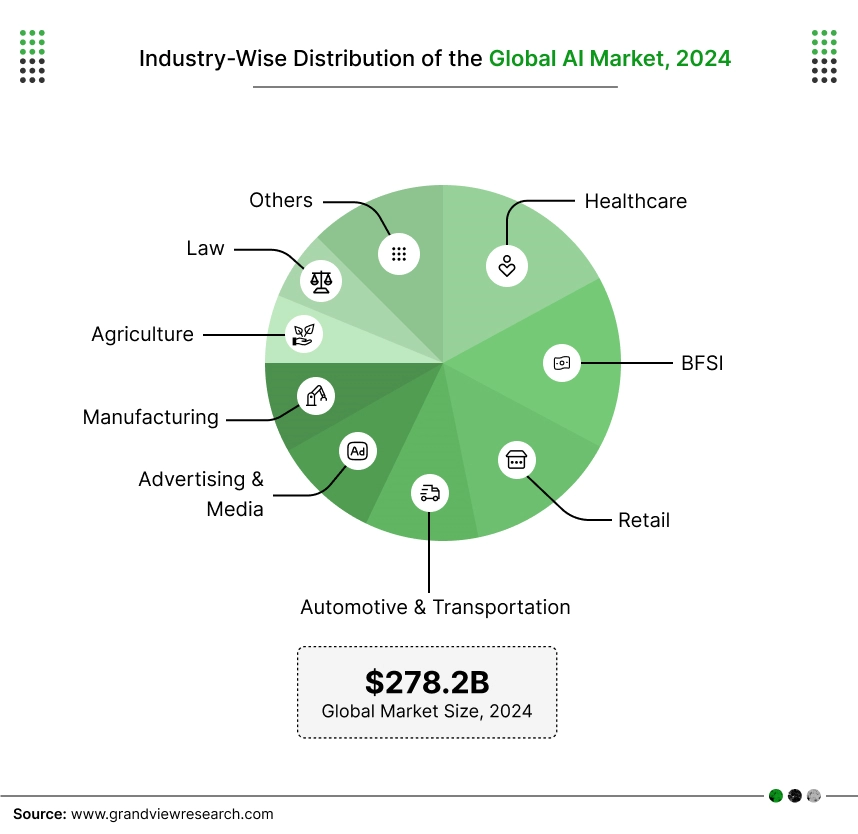

A stable roadmap becomes even more important when you look at the current pace of AI adoption. Recent reports value the global artificial intelligence market at USD 279.22 billion in 2024, with forecasts of USD 3,497.26 billion by 2033, a CAGR of 31.5 percent from 2025 to 2033.

(source: Grand View Research)

Numbers like these confirm what many executives already sense during boardroom discussions. AI adoption is rising fast, and companies with a solid roadmap stand in a stronger position than the rest.

Many leadership teams now look for a simple path that explains evaluation steps, planning choices, and responsibilities before development begins. With AI trends influencing every major decision, early clarity matters more than ever.

This guide shows what to assess, how to set expectations, and how to begin building an AI model from scratch with confidence.

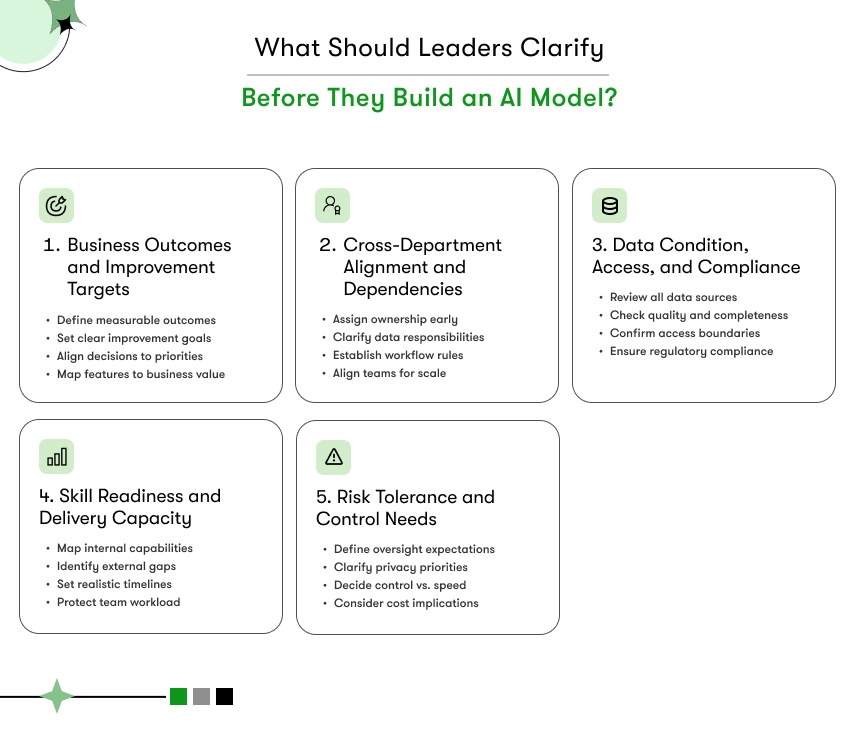

What Should Leaders Clarify Before They Build an AI Model?

Strong direction at the beginning determines how smoothly development progresses. Leadership teams work faster when essential decisions are finalised before any dataset preparation or architecture planning begins.

When foundational elements remain undefined, each department forms a different interpretation of what the system should deliver, which slows coordination and weakens early progress.

Leadership teams protect momentum by establishing priorities, ownership, and expectations at the start, so the organisation can build an AI model with confidence.

Business Outcomes and Improvement Targets

Clear outcomes guide every technical decision. Leaders gain stronger traction once improvement goals are defined with precision.

- Organisations may plan for sharper forecasting, streamlined workflows, or more dependable visibility across critical processes.

- Clear improvement goals help the development team understand the purpose behind every training decision.

- Alignment strengthens because technical contributors can map each feature and data source to a measurable business priority.

Cross-Department Alignment and Operational Dependencies

Multiple departments influence the behaviour of any intelligent system.

- Finance expects reliable numerical signals for planning cycles.

- Operations teams expect stability under real workload pressure.

- Customer-facing teams rely on timely insights to support response quality.

Coordination improves when leadership defines ownership, data support responsibilities, escalation rules, and decision workflows. Clear structure becomes even more important when an organisation prepares to build an AI model from scratch for a complex environment that handles varied AI use cases.

Alignment supports steady progress and prevents repeated revisions during early phases.

Data Condition, Accessibility, Compliance, and Ownership

Data environments often determine whether development progresses smoothly or faces repeated delays.

- Leaders review each source of information for quality, completeness, access boundaries, and regulatory conditions.

- Compliance requirements in finance, retail, healthcare, and logistics often shape how teams prepare information for training.

- Full visibility into the information landscape helps the development team plan training cycles accurately and avoid interruptions during model preparation.

A grounded understanding of information assets positions the organisation to train without unexpected constraints.

Skill Readiness and Delivery Capacity

Capability mapping helps leadership teams plan support effectively.

- Some responsibilities, such as data cleansing or validation, can be managed internally.

- Complex engineering work often requires external expertise to maintain reliability throughout training and deployment.

- Leadership prepares realistic timelines by acknowledging internal strengths and identifying areas where a development partner can accelerate progress.

This clarity creates a delivery environment that avoids overstretching internal teams.

Risk Tolerance and Level of Control Needed

Every organisation carries different expectations around oversight, privacy, and long-term ownership.

- Some companies prefer complete control over configuration, training logic, and monitoring behaviour.

- Other companies value faster delivery through a shared-responsibility model with a skilled partner.

- Cost expectations influence these choices, especially when leadership evaluates how much it costs to build an AI model for a regulated or high-volume environment.

Governance preferences shape the path toward a custom build or a guided partnership approach.

Leadership clarity becomes the anchor that shapes development, aligns departments, and protects progress from avoidable friction. Decisions made at the beginning influence timelines, accuracy, and long-term reliability.

Strong direction helps the organisation approach every stage with purpose and prepares the entire environment for a system that contributes real operational value.

How to Build an AI Model From Scratch That Actually Works in Your Organisation

Many teams begin with strong ideas and clear ambition, yet progress slows when leadership tries to build an AI model that supports real operational needs. A dependable outcome emerges through structured choices, careful groundwork, and disciplined delivery.

The following steps outline how to build an AI model in a way that aligns with business goals, strengthens adoption, and supports scalable growth, similar to the planning discipline seen in successful AI app development.

1. Start With One Precise Use Case

Do not begin with a broad vision. Begin with one sharp problem that affects real work.

- Choose a use case that has clear input and output, such as lead scoring, demand forecasting, ticket triage, quality checks, or risk flags.

- Confirm that the problem appears often enough to justify the effort and that teams feel the pain every week.

- Define a simple success statement, such as reduced handling time, fewer mistakes, or better prediction accuracy.

- Align this use case with revenue, cost, risk, or customer experience so the outcome is easy to measure.

2. Map the Data You Already Have

Most projects fail because data reality does not match assumptions. Before any model design, understand what information exists today.

- List all systems that hold relevant information such as CRM, ERP, ticketing tools, spreadsheets, or logs.

- For each source, note who owns access, what time range it covers, and how frequently it updates.

- Take a small sample and review columns, missing values, duplicates, and obvious errors.

- Decide which sources are essential for the first version and which can wait for future iterations.

This work gives a grounded base for building an AI model from scratch without surprises during training.
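The sample review described in this step can start as a short script. The sketch below uses only the Python standard library and counts missing values per column and exact duplicate rows; the column names (`order_id`, `zone`, `qty`) and the inline sample are hypothetical, and a real review would read a sample exported from the source system.

```python
from collections import Counter

def profile_sample(rows: list[dict]) -> dict:
    """Summarise missing values and exact duplicate rows in a data sample."""
    columns = sorted({key for row in rows for key in row})
    # A value counts as missing if the key is absent, None, or an empty string.
    missing = {
        col: sum(1 for row in rows if row.get(col) in (None, ""))
        for col in columns
    }
    # Two rows are duplicates when every column value matches.
    fingerprints = Counter(tuple(row.get(col) for col in columns) for row in rows)
    duplicates = sum(count - 1 for count in fingerprints.values() if count > 1)
    return {"rows": len(rows), "columns": columns, "missing": missing, "duplicates": duplicates}

# Hypothetical sample pulled from an order system.
sample = [
    {"order_id": "A1", "zone": "north", "qty": "3"},
    {"order_id": "A2", "zone": "", "qty": "5"},
    {"order_id": "A1", "zone": "north", "qty": "3"},  # exact duplicate of the first row
    {"order_id": "A3", "zone": "south", "qty": None},
]
report = profile_sample(sample)
print(report)
```

A report like this gives the team concrete numbers to discuss with each data owner before deciding which sources are essential for the first version.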

3. Design the Data Pipeline Before the Model

A model will only behave well if the information feeding it is reliable and flows in a repeatable way.

- Define how raw records move from source systems into a clean, structured format ready for training.

- Decide which fields must be cleaned, normalised, or encoded and document those rules clearly so they can be repeated.

- Separate data into training, validation, and test sets while keeping real-world distributions intact.

- Plan how the same pipeline will run after deployment so production data matches training data as closely as possible.

This step matters as much as any algorithm choice when teams build and train an AI model for production use.
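Two of the pipeline rules above can be sketched together: cleaning rules live in one documented function that runs identically in training and production, and the split is stratified so each set keeps the real label mix. This is a minimal standard-library sketch; the field names, the cleaning rules in `clean_record`, and the 70/15/15 ratios are illustrative assumptions, not recommendations.

```python
import random
from collections import defaultdict

def clean_record(raw: dict) -> dict:
    """Documented cleaning rules, reused unchanged after deployment."""
    return {
        "zone": (raw.get("zone") or "unknown").strip().lower(),  # normalise text values
        "qty": int(raw.get("qty") or 0),                         # missing quantity becomes 0
        "label": int(raw["label"]),
    }

def stratified_split(records, ratios=(0.7, 0.15, 0.15), seed=42):
    """Split into train/validation/test while preserving the label distribution."""
    by_label = defaultdict(list)
    for rec in records:
        by_label[rec["label"]].append(rec)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_train = round(len(group) * ratios[0])
        n_val = round(len(group) * ratios[1])
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Synthetic raw rows: about 25 percent positive labels.
raw_rows = [{"zone": " North ", "qty": str(i % 7), "label": str(int(i % 4 == 0))} for i in range(200)]
records = [clean_record(r) for r in raw_rows]
train, val, test = stratified_split(records)
print(len(train), len(val), len(test))
```

Keeping `clean_record` as the single source of cleaning logic is what lets production data match training data; a random split without stratification can leave rare outcomes under-represented in validation.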

4. Choose the Simplest Model That Can Succeed

Complex architecture is not a badge of quality. The correct choice is the simplest design that meets the accuracy and reliability target.

- Begin with well-known approaches such as gradient boosting, logistic regression, or basic neural networks before considering heavy architectures.

- Match the model family to the type of data. Text, tabular data, time series, and images each benefit from different techniques.

- Review the skills available in your team and choose a design that they can understand, maintain, and explain.

- Document why this approach fits the use case so future team members understand the reasoning.
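The rule "choose the simplest model that can succeed" can be made explicit as a selection step over validation results. In this sketch the candidate names, their complexity ranks, and their scores are hypothetical placeholders; in practice the scores would come from the validation set described in step 5.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Candidate:
    name: str
    complexity: int   # hypothetical rank: 1 = simplest to build, explain, and maintain
    val_score: float  # validation metric such as F1; higher is better

def choose_simplest(candidates: list[Candidate], target: float) -> Optional[Candidate]:
    """Return the least complex candidate that meets the target, or None."""
    for cand in sorted(candidates, key=lambda c: c.complexity):
        if cand.val_score >= target:
            return cand
    return None  # nothing qualifies: revisit data or the target before adding complexity

candidates = [
    Candidate("small neural network", complexity=3, val_score=0.84),
    Candidate("logistic regression", complexity=1, val_score=0.78),
    Candidate("gradient boosting", complexity=2, val_score=0.83),
]
chosen = choose_simplest(candidates, target=0.80)
print(chosen.name if chosen else "no candidate meets the target")
```

Here the neural network scores slightly higher, but gradient boosting is selected because it already clears the target with less complexity; recording that reasoning is exactly the documentation the last bullet asks for.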

5. Run Iterative Training and Honest Evaluation

Training should be a series of careful experiments, not a single pass. Evaluation must reflect real work, not just statistics.

- Define clear metrics such as precision, recall, F1 score, error rate, or business KPIs that matter for the chosen use case.

- Run multiple training cycles, change one factor at a time, and record the outcome so progress is traceable.

- Use a validation set that reflects the real environment rather than idealised examples.

- Review results with subject matter experts who handle the process daily and adjust based on their feedback.
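For a yes/no use case, precision, recall, and F1 come straight from counts of correct and incorrect predictions. The hand-rolled sketch below avoids dependencies (libraries such as scikit-learn provide equivalents, for example `precision_recall_fscore_support`); the labels are hypothetical, and the `experiment_log` list is one simple way to keep the "one factor at a time" record traceable.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict:
    """Precision, recall, and F1 for a binary task where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged cases, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real cases, how many were flagged
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

# One entry per training run, noting the single factor that changed.
experiment_log = []
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
experiment_log.append({"run": 1, "changed": "baseline features", **metrics})
print(experiment_log)
```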

6. Test the Model Inside a Safe, Realistic Pilot

Do not push the model across the entire organisation on day one. Run a focused pilot with real users and real volume.

- Select one branch, region, or team and introduce the model as a decision support tool before making it fully automatic.

- Track accuracy, response time, error patterns, and user feedback over a defined pilot window.

- Log every prediction and outcome so you can investigate cases where the model failed or behaved unexpectedly.

- Adjust thresholds, features, or logic based on what the pilot reveals, then repeat a shorter pilot if needed.
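When every pilot prediction is logged with its eventual outcome, adjusting the threshold becomes a simple replay of that log. The sketch below assumes a model that outputs a score between 0 and 1 and flags a case when the score meets the threshold; the log entries and candidate thresholds are hypothetical.

```python
def sweep_thresholds(log: list[tuple[float, int]], thresholds: list[float]) -> list[dict]:
    """Precision and recall of the logged pilot predictions at each candidate threshold."""
    total_positives = sum(outcome for _, outcome in log)
    results = []
    for th in thresholds:
        flagged = [outcome for score, outcome in log if score >= th]  # cases the model would flag
        hits = sum(flagged)                                            # flagged cases that were real
        results.append({
            "threshold": th,
            "flagged": len(flagged),
            "precision": hits / len(flagged) if flagged else 0.0,
            "recall": hits / total_positives if total_positives else 0.0,
        })
    return results

# Hypothetical pilot log of (model score, actual outcome) pairs.
pilot_log = [(0.95, 1), (0.85, 1), (0.80, 0), (0.65, 1),
             (0.55, 0), (0.40, 0), (0.30, 1), (0.10, 0)]
for row in sweep_thresholds(pilot_log, [0.5, 0.7, 0.9]):
    print(row)
```

Raising the threshold trades recall for precision. Which point is right depends on the relative cost of a missed case versus a false alarm, a business decision for the pilot team rather than a purely technical one.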

7. Plan Ownership, Monitoring, and Budget From Day One

A working solution needs clear ownership and a realistic view of ongoing effort. Many executives ask how much it costs to build an AI model, but the better question is what it takes to sustain it.

- Assign a specific team as the long-term owner for behaviour, monitoring, and improvements.

- Set up dashboards that track prediction quality, drift in data, and key business KPIs tied to the use case.

- Reserve part of the budget and calendar for periodic retraining and updates as conditions change.

- Define a change process for model updates so operations, product, and compliance teams stay informed.
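One common way to put a number on "drift in data" for a monitoring dashboard is the Population Stability Index (PSI), which compares a feature's distribution at training time with its distribution in live traffic. The sketch below is a minimal standard-library version with equal-width bins; the bin count, the small `eps` floor, and the 0.1 and 0.25 alert bands are widely used rules of thumb rather than fixed standards, and the sample data is synthetic.

```python
import math

def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index between a training-time sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp live values outside the training range
        return [max(c / len(values), eps) for c in counts]  # floor avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(value: float) -> str:
    # Rule-of-thumb bands; tune them per feature and use case.
    if value < 0.1:
        return "stable"
    return "moderate drift" if value < 0.25 else "significant drift"

training = [i / 100 for i in range(100)]    # synthetic training-time feature values
live = [0.5 + i / 200 for i in range(100)]  # synthetic live values shifted upward
print(drift_status(psi(training, training)), drift_status(psi(training, live)))
```

A drift alert is a prompt to investigate, not an automatic retrain; the change process in the last bullet decides what happens next.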

8. Formalise the Playbook for the Next Use Case

Once one use case works, the real advantage appears when that experience becomes a repeatable playbook.

- Document every phase: use case selection, data mapping, pipeline design, model choice, training, pilot, and rollout.

- Capture lessons that save time or avoid mistakes, and convert those lessons into standards for future projects.

- Reuse components such as data connectors, monitoring scripts, and evaluation templates.

- Use this playbook as the base for building the next AI model from scratch, so the next business problem is addressed with less friction and lower risk.

A structured roadmap gives leadership confidence at every phase of the journey. Each step moves with intention, each cycle adds stability, and each improvement brings the organisation closer to dependable outcomes from advanced systems.

This approach also strengthens the ROI of AI apps because progress becomes predictable instead of experimental. A method like this turns AI development into a repeatable capability that supports long-term growth.

When Should a Company Build an AI Model From Scratch?

Executives eventually reach a point where an existing tool no longer supports operational goals. Many organisations face workflow gaps, limited customisation options, or restricted access to configuration settings.

Leadership teams seek deeper control, stronger alignment with internal rules, and behaviour shaped around company priorities instead of generic patterns. A clear need for a custom path becomes visible once existing systems fail to support strategic direction.

Situations Where Off-the-Shelf Tools Limit Progress

Generalised platforms provide convenience during early stages, yet specialised environments often demand a level of precision that broad solutions cannot deliver.

- Ready-made tools often follow wide-ranging logic, which limits the ability to support company-specific decision pathways.

- Operational teams experience time loss when internal workflows must be adjusted to fit general-purpose tools.

- High-precision industries rely on accuracy levels that standard solutions fail to maintain consistently.

- Limitations across compliance, custom rules, and behavioural tuning encourage organisations to consider building an AI model from scratch to achieve performance aligned with internal expectations.

A custom build becomes necessary when operational depth exceeds the capacity of a generalised system.

Data Complexity That Requires an Adaptive System

Many organisations operate with layered, dynamic, or multi-source information that demands advanced interpretation.

- Domain-specific patterns are frequently lost when data is processed through frameworks that were never designed for specialised inputs.

- Unstructured information requires logic that understands context, which many pre-built systems struggle to support.

- Custom development allows engineering teams to design learning paths that reflect operational realities instead of forcing information to match rigid templates.

- Leadership teams seeking a learning environment built around internal patterns often evaluate how to build an AI model from scratch to achieve long-term adaptability.

Adaptive architectures unlock value in environments where information complexity remains high.

When Control, Privacy, and Ownership Become Strategic Priorities

Many companies reach a scale where deeper influence over behaviour, privacy, and refinement processes becomes essential.

- Sensitive departments require full oversight to protect internal information at every stage of development.

- Custom development creates opportunities to refine behaviour, training logic, and performance settings without waiting for release cycles controlled by external providers.

- Intellectual property ownership becomes valuable once AI output contributes directly to strategic or operational advantage.

- Long-term initiatives gain stability when leadership teams guide evolution independently instead of relying on vendor schedules.

A customised system becomes the preferred direction when privacy, control, and ownership shape competitive advantage.

How to Evaluate Long-Term ROI for Custom Models

A custom build becomes a strong investment when long-term returns outweigh the limitations of general-purpose tools.

- Operational leaders calculate ROI by comparing the value of improved insight quality, reduced manual effort, and stronger decision cycles against the total cost of building and running the model.

- Custom models eliminate restrictions connected to licensing rules, usage caps, or external approval cycles.

- Tailored solutions continue to improve when trained on meaningful internal information rather than large, generic datasets.

- Value increases as the system adapts to internal priorities and contributes to accuracy, stability, and strategic growth.
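Once benefits such as reduced manual effort are expressed in money, the ROI review comes down to simple arithmetic. The sketch below computes an undiscounted multi-year ROI and a payback period; every figure in the example is hypothetical, and finance teams may prefer a discounted measure such as net present value.

```python
def roi_summary(initial_cost: float, annual_running_cost: float,
                annual_benefit: float, years: int) -> dict:
    """Simple, undiscounted ROI and payback period for a custom model."""
    total_cost = initial_cost + annual_running_cost * years
    total_benefit = annual_benefit * years
    roi = (total_benefit - total_cost) / total_cost
    monthly_net = (annual_benefit - annual_running_cost) / 12
    # Payback is undefined if running costs eat the whole benefit.
    payback_months = initial_cost / monthly_net if monthly_net > 0 else float("inf")
    return {"roi": roi, "payback_months": payback_months}

# Hypothetical figures for illustration only.
summary = roi_summary(initial_cost=120_000, annual_running_cost=24_000,
                      annual_benefit=90_000, years=3)
print(f"ROI over 3 years: {summary['roi']:.0%}, payback: {summary['payback_months']:.1f} months")
```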

A custom approach supports organisations seeking behaviour shaped around daily realities, full ownership, and accuracy aligned with long-term objectives.

What Does a High-Performing AI Model Look Like in Real Operations?

Senior leaders evaluate success by observing behaviour inside real workflows rather than controlled testing environments. A dependable system supports decisions with consistency, adapts to everyday operational changes, and delivers measurable outcomes that matter across departments.

Companies preparing to build an AI model for enterprise use often rely on these performance indicators to confirm that the system is capable of supporting meaningful work at scale.

How Stable Models Reduce Delays and Strengthen Forecasting

Operational stability becomes the first sign of maturity. A reliable system maintains consistent behaviour even when data volume increases or conditions shift during the day.

- Consistency reduces unnecessary delays because teams avoid repeated checks, manual adjustments, and accuracy corrections.

- Forecasting quality improves when patterns are interpreted correctly across short-term and long-range periods.

- Staff gain confidence when predictions remain dependable under varied workload levels.

- Stability supports planning cycles, supply allocation decisions, and resource management with fewer interruptions.

Role of Automation in Productivity Improvements

Automation creates meaningful impact when support flows smoothly into daily routines instead of generating new friction.

- Repetitive work decreases because classification, routing, early-stage analysis, and recommendation tasks move automatically.

- Specialists encounter fewer interruptions, which strengthens focus on responsibilities requiring judgment and expertise.

- Productivity increases as routine processing shifts to automated flows.

- Throughput improves across departments, especially during weeks with fluctuating workloads.

Impact on Customer Experience, Risk Control, and Daily Operations

High-performing models influence how customers and internal users experience each interaction.

- Response times drop as decisions receive support through accurate, near-instant analysis.

- Error reduction improves service consistency because behaviour follows rules aligned with company expectations.

- Risk exposure decreases when unusual patterns are detected early and brought to the attention of responsible teams.

- Operational handoffs become smoother, which reduces bottlenecks and strengthens continuity across functions.

How Executives Measure Success After Deployment

Successful deployment becomes meaningful only when senior teams can track progress using practical metrics. These indicators help confirm whether the organisation’s investment is contributing to real outcomes.

- Accuracy and stability trends reveal whether behaviour remains dependable in varied conditions.

- Operational improvements appear when staff time shifts from corrective work to strategic execution.

- Financial gains show up through lower waste, better utilisation, and more efficient resource consumption.

- Strategic value becomes evident when the system supports expansion, scalability, and stronger decision cycles.

Responsiveness That Supports Daily Decisions

Timely output influences how much value a system contributes during fast-moving operations. A model that responds slowly becomes a barrier, while a responsive model strengthens decision cycles.

- Rapid responses help teams act without hesitation.

- Real-time or near-real-time output prevents delays during high-priority decisions.

- Quick feedback supports high-risk tasks where timing plays a major role.

- Departments operate more smoothly when insights arrive at the moment they are needed.
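Responsiveness is easier to manage when it is measured the same way every time. A common approach is to track a high percentile of response times, such as the 95th, rather than the average, because the occasional slow response is what users notice. This sketch uses the nearest-rank percentile definition; the 500 ms target and the sample timings are hypothetical.

```python
import math

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of latency samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def meets_latency_target(samples_ms: list[float], p95_target_ms: float) -> bool:
    """True when the 95th-percentile response time is within the target."""
    return percentile(samples_ms, 95) <= p95_target_ms

# Hypothetical response times (milliseconds) collected during one busy hour.
latencies_ms = [120, 95, 110, 400, 105, 98, 130, 115, 102, 99,
                101, 97, 125, 108, 111, 96, 118, 104, 100, 900]
print(percentile(latencies_ms, 50), percentile(latencies_ms, 95),
      meets_latency_target(latencies_ms, 500))
```

The median here looks healthy, while the 95th percentile exposes the slow tail that teams experience during high-priority decisions.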

Improvement Based on Real Experience

A high-performing model becomes more valuable as it adapts to the organisation’s environment. This improvement strengthens accuracy and stability over time.

- Behaviour improves as the model is retrained on new information.

- Updates maintain reliability instead of introducing uncertainty.

- Learning paths shaped by internal patterns strengthen accuracy across complex tasks.

- Teams benefit from a dependable system that grows alongside business needs.

Operational Gains That Justify the Investment

Executives measure success by the outcomes the system supports once deployed. These outcomes confirm whether the investment was worthwhile.

- Manual effort decreases as workflows shift toward automated execution.

- Lower error frequency decreases waste and strengthens service consistency.

- Broader visibility across functions supports stronger planning and more confident decisions.

- Customer experiences improve through faster, more accurate responses at every touchpoint.

A system that consistently delivers these results is considered ready for enterprise-scale use. These qualities help organisations plan how to build an AI model that performs with the same level of confidence in their environment.

Now, let’s see how an AI model works in real life through a practical example.

How an AI Model Works in Real Operations: A Simple Story

Mark, an operations manager from Texas, managed daily forecasting for a distribution company. Unpredictable order spikes and constant workload pressure created stress across his entire planning cycle. One focused initiative helped the organisation move toward a more stable workflow.

How Mark Started

- Mark selected one precise use case. He planned to build an AI model for next-day order forecasting to support staff planning and route allocation.

- His team gathered six months of order data, reviewed every record, removed duplicates, and corrected errors.

- Order information was grouped by time slot, delivery zone, and order type to form a dependable training foundation for building an AI model from scratch.

How the First Version Was Built

- The team built and trained a lightweight forecasting model on the cleaned, structured order data.

- Evaluation took place inside one branch through a controlled pilot, helping planners experience early benefits and share feedback.

- Feedback shaped improvements and prepared the model for a broader rollout.
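The story does not say which model Mark's team used. As a hypothetical illustration of a "lightweight" first version, the sketch below groups orders by day, time slot, delivery zone, and order type, then forecasts the next day as the average of the same weekday over the previous four weeks, a common seasonal baseline. The field names and order history are invented for the example.

```python
from collections import defaultdict
from datetime import date, timedelta

def group_orders(orders: list[dict]) -> dict:
    """Count orders per (day, time slot, delivery zone, order type)."""
    counts = defaultdict(int)
    for o in orders:
        counts[(o["day"], o["slot"], o["zone"], o["type"])] += 1
    return counts

def forecast_next_day(counts: dict, target_day: date, slot: str, zone: str,
                      order_type: str, weeks: int = 4) -> float:
    """Seasonal baseline: average count on the same weekday over the previous `weeks` weeks."""
    history = [
        counts.get((target_day - timedelta(weeks=w), slot, zone, order_type), 0)
        for w in range(1, weeks + 1)
    ]
    return sum(history) / weeks

# Hypothetical history: the same weekday in each of the last four weeks had 10, 12, 14, and 12 orders.
target = date(2024, 6, 10)
orders = []
for w, n in zip(range(1, 5), [10, 12, 14, 12]):
    day = target - timedelta(weeks=w)
    orders += [{"day": day, "slot": "08-10", "zone": "north", "type": "standard"}] * n
counts = group_orders(orders)
print(forecast_next_day(counts, target, "08-10", "north", "standard"))  # 12.0
```

A baseline like this also gives later, more complex models a clear bar to beat during the pilot.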

What Changed After Deployment

- Next-day forecasts improved peak-hour planning, allowing teams to allocate staff and vehicles before workload increased.

- Missed timelines dropped due to early action in high-pressure windows.

- Overtime decreased because planners relied on consistent signal patterns instead of guesswork.

How the Organisation Grew From One Project

- Leadership expanded the forecasting solution across additional branches after observing stronger performance.

- Mark’s team later reused the same structure to build a second solution, focused on delivery delay alerts, from scratch and in less time.

- Every new initiative moved with less friction because data preparation, training steps, pilot design, and refinement methods were already documented.

This story shows how structured decisions, steady preparation, and focused execution can turn complex goals into reliable business results. One practical win builds confidence across leadership and creates a repeatable path for future AI initiatives, especially when supported by disciplined AI Development Services that guide each stage with clarity.

How Much Does It Cost to Build an AI Model?

Enterprise buyers evaluate cost early because budget planning influences strategy, scope, and timelines. Companies planning to build an AI model can use the ranges below as a realistic reference for typical investments seen across industries.

The table below gives indicative pricing ranges for different types of AI models; actual quotes vary with scope, data condition, and delivery model.

AI Model Development Cost Breakdown

Companies evaluate several categories when estimating the investment needed to build an AI model. The summary below reflects actual enterprise pricing patterns used by consulting firms and large technology teams across finance, healthcare, retail, and manufacturing.

| Model Type | Complexity Level | Typical Use Cases | Realistic Cost Range (USD) | Notes |
|---|---|---|---|---|
| Basic AI Model | Low | Simple classification, rule-based prediction, early-stage scoring | 40,000 – 60,000 | Suitable for simple automation or structured environments. |
| Mid-Complexity Model | Medium | Forecasting, scoring, structured analytics, customer insights | 60,000 – 180,000 | Requires multiple data sources and deeper analysis logic. |
| Advanced AI Model | High | Vision systems, NLP, anomaly detection, multi-layer patterns | 180,000 – 220,000 | Needs specialised engineering, large datasets, and strong compute. |
| Enterprise-Scale System | Very High | End-to-end intelligence, automation across functions | 250,000 – 350,000 | Includes pipelines, continuous training, monitoring, and governance. |

Primary Cost Drivers That Influence Total Investment

Each AI initiative carries different demands based on workflows, data quality, and operational requirements. The table outlines how these factors shape the final budget in real enterprise scenarios.

| Cost Driver | Impact on Budget | Enterprise Reality |
|---|---|---|
| Data Preparation | Medium–High | Cleaning, labelling, and integration often consume 40–60 percent of project effort. |
| Infrastructure & Compute | Medium | Cloud training, GPUs, orchestration, and storage add 10–30 percent to the estimate. |
| Engineering & Architecture | High | Custom behaviour requires skilled talent and longer development cycles. |
| Training Cycles | Medium–High | More iterations increase compute hours and validation work. |
| Compliance & Security | Medium | Required for healthcare, finance, retail, and logistics environments. |
| Maintenance | Medium | Annual upkeep and tuning usually represent 15–25 percent of initial cost. |
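The percentage bands in this table can be turned into a rough multi-year budget range. The sketch below assumes the infrastructure uplift sits on top of the build figure and that annual maintenance is a share of the resulting initial cost; data preparation is treated as already inside the build cost, since the table expresses it as a share of effort. If a vendor quote already includes compute, drop that uplift. The three-year horizon is also an assumption.

```python
def estimate_total_cost(build_cost: float, infra_pct: float,
                        maintenance_pct: float, years: int) -> float:
    """Rough multi-year cost: build plus compute uplift, then annual upkeep on that figure."""
    initial = build_cost * (1 + infra_pct)      # infrastructure and compute share
    upkeep = initial * maintenance_pct * years  # maintenance as a yearly share of initial cost
    return round(initial + upkeep)

# Mid-complexity band (60,000 – 180,000) over a three-year horizon.
low = estimate_total_cost(60_000, infra_pct=0.10, maintenance_pct=0.15, years=3)
high = estimate_total_cost(180_000, infra_pct=0.30, maintenance_pct=0.25, years=3)
print(low, high)  # 95700 409500
```

The spread shows why ongoing upkeep deserves a budget line of its own: over three years it can approach or exceed the original build cost.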

Training Cycles and Their Impact on Budget

Training contributes significantly to overall cost, especially when models need frequent refinement or operate in high-volume environments. The table summarises how training variables influence investment.

| Training Factor | Cost Influence | Explanation |
|---|---|---|
| Size of Dataset | Higher when large or unstructured | More data increases storage, processing, and iteration time. |
| Training Iterations | Higher with each cycle | Complex models often require extended training to reach stable performance. |
| Validation & Monitoring | Adds measurable effort | Required to ensure accuracy stays consistent in real operations. |
| Updates Over Time | Recurring cost | Needed to protect performance and manage drift. |

Cost Benefits of Clean Data and Structured Foundations

Companies reduce long-term expenses when data stays consistent and development follows a disciplined approach. These practices also influence the overall AI app development cost because cleaner inputs and structured processes prevent unnecessary rework. The table below outlines the operational advantages that support cost efficiency.

| Advantage | Why It Reduces Cost |
|---|---|
| Clean inputs reduce engineering time | Developers focus on refinement instead of repairing inconsistencies. |
| Lower compute usage | Better data shortens training cycles and cloud consumption. |
| Faster deployment | High-quality inputs accelerate integration and evaluation. |
| Reduced maintenance cost | Stable information lowers drift and improves accuracy over time. |

Companies control cost effectively when the project starts with a defined scope, a realistic dataset, and practical expectations. Based on the ranges above, a budget of roughly USD 60,000 to 350,000 typically covers an AI model that performs well inside real operations, depending on complexity and scope.

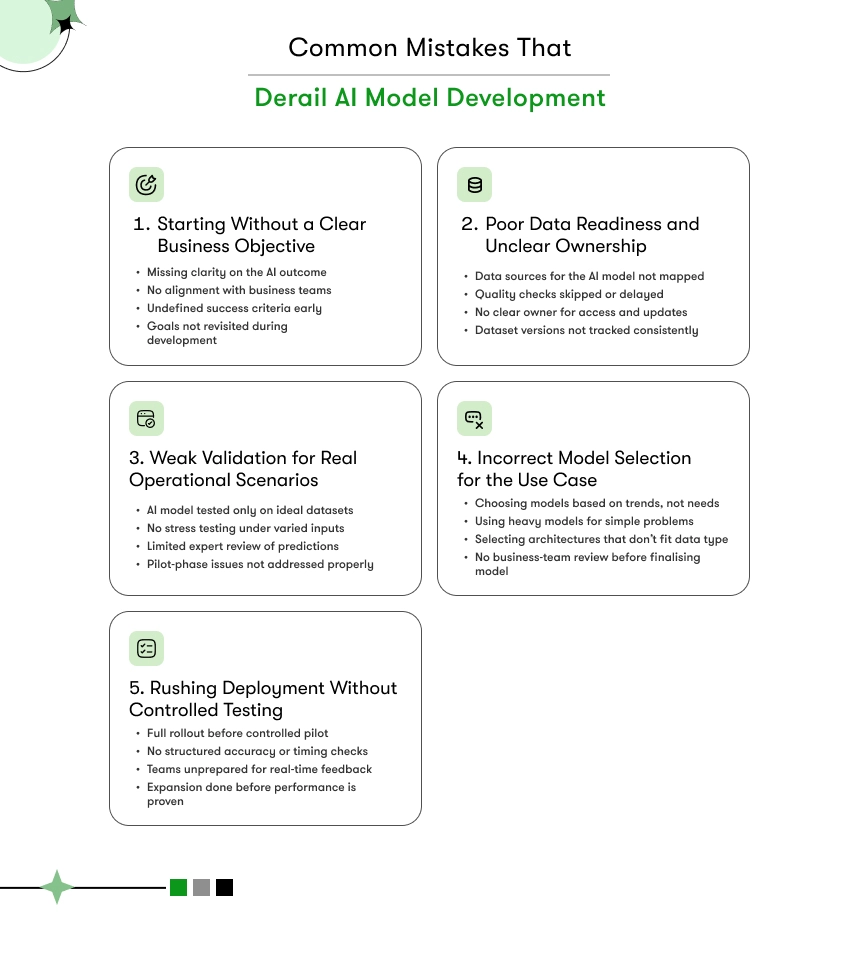

Common Mistakes That Derail AI Development and How Teams Avoid Them

AI projects fail more often due to planning issues than technical complexity. Companies that plan to build an AI model gain stronger results when they avoid the following common mistakes.

Each point below includes actionable steps organisations can apply immediately, even before development begins.

Starting Without a Clear Business Objective

Projects lose direction when teams focus on technology instead of outcomes. A clear objective guides the entire development path and prevents confusion later.

- Define a clear operational result such as reduced processing time, lower error rates, better forecasting, or stronger service consistency.

- Confirm the benefit with the departments that will depend on the model.

- Document the decision path so every contributor understands what the model must achieve.

- Review the objective during development to ensure the project is still aligned with real business needs.

Poor Data Readiness and Unclear Ownership

Data issues are among the most common reasons projects stall. Even a strong model cannot work well without dependable information.

- Identify every source of data needed for training and confirm who controls each source.

- Check the quality early to avoid delays during training.

- Assign ownership to a specific team so access, updates, and permissions stay organised.

- Keep a versioned dataset to track changes and prevent confusion during evaluation.
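A lightweight way to keep a versioned dataset is to fingerprint its contents, so every training run and evaluation records exactly which data it used. This is a minimal sketch with `hashlib` and `json` from the standard library; the 12-character short hash and the record format are assumptions, and dedicated tools such as DVC handle storage and history at larger scale.

```python
import hashlib
import json

def dataset_version(records: list[dict]) -> str:
    """Deterministic short fingerprint of a dataset's contents (record order matters)."""
    digest = hashlib.sha256()
    for rec in records:
        # sort_keys makes the hash independent of key order within a record
        digest.update(json.dumps(rec, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:12]

original = [{"id": 1, "zone": "north"}, {"id": 2, "zone": "south"}]
reordered_keys = [{"zone": "north", "id": 1}, {"zone": "south", "id": 2}]
corrected = [{"id": 1, "zone": "north"}, {"id": 2, "zone": "east"}]
print(dataset_version(original), dataset_version(reordered_keys), dataset_version(corrected))
```

Storing this fingerprint next to each evaluation result makes it obvious when two runs were compared on different data.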

Incorrect Model Selection

Some teams select a model based on trend or complexity rather than actual need. The wrong model leads to high cost, low accuracy, and repeated rework.

- Select a model that fits the real structure of your data instead of choosing advanced architecture without purpose.

- If the requirement is small, choose a lightweight model to reduce cost and training time.

- If the organisation relies on unstructured information, choose an approach that supports that format without forcing transformations.

- Review each option with business teams so the model matches the environment where it will operate.

Weak Validation Approach

A model that looks accurate in testing may behave differently during daily operations. Strong validation prevents failure after deployment.

- Test predictions using real departmental scenarios instead of isolated test sets.

- Validate the model under different input conditions to check stability.

- Compare output with decisions made by subject matter experts to ensure alignment with real expectations.

- Track errors and feedback during pilot phases and refine the model before rollout.
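Two of these checks are easy to compute once validation results carry a little context: accuracy broken down by input condition, and agreement with the decisions subject matter experts made on the same cases. In the sketch below the `segment` values ("weekday", "holiday") and all rows are hypothetical.

```python
from collections import defaultdict

def accuracy_by_segment(rows: list[dict]) -> dict:
    """Accuracy per input condition, so weak segments are not hidden by the overall average."""
    totals, correct = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row["segment"]] += 1
        correct[row["segment"]] += int(row["prediction"] == row["actual"])
    return {seg: correct[seg] / totals[seg] for seg in totals}

def expert_agreement(rows: list[dict]) -> float:
    """Share of cases where the model's decision matches the expert's decision."""
    return sum(row["prediction"] == row["expert"] for row in rows) / len(rows)

# Hypothetical validation rows with the expert's call recorded alongside the outcome.
rows = [
    {"segment": "weekday", "prediction": 1, "actual": 1, "expert": 1},
    {"segment": "weekday", "prediction": 0, "actual": 0, "expert": 0},
    {"segment": "weekday", "prediction": 1, "actual": 1, "expert": 1},
    {"segment": "weekday", "prediction": 0, "actual": 0, "expert": 1},
    {"segment": "holiday", "prediction": 1, "actual": 0, "expert": 0},
    {"segment": "holiday", "prediction": 0, "actual": 0, "expert": 0},
]
print(accuracy_by_segment(rows), round(expert_agreement(rows), 2))
```

An overall accuracy of about 83 percent would hide the fact that the model is right only half the time on holiday cases, which is the kind of gap a pilot should surface before rollout.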

Rushing Deployment Without Controlled Testing

Deployment without a controlled environment creates operational interruptions. Companies gain stronger outcomes when they release the system in stages.

- Introduce the model as a limited pilot inside one department before expanding.

- Monitor performance with a structured checklist that tracks accuracy, timing, and consistency.

- Provide staff with clear instructions so feedback reaches the AI team quickly.

- Scale the rollout only when the system performs reliably and matches operational needs.
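The "scale only when it performs reliably" rule works best when the go/no-go criteria are written down before the pilot starts. The sketch below turns a structured checklist into an explicit decision; the three criteria, their names, and the thresholds are hypothetical examples of accuracy, timing, and consistency checks.

```python
def rollout_decision(pilot: dict, criteria: dict) -> tuple[bool, list[str]]:
    """Go/no-go check of pilot results against thresholds agreed before the pilot."""
    failures = []
    if pilot["accuracy"] < criteria["min_accuracy"]:
        failures.append(f"accuracy {pilot['accuracy']:.2f} < {criteria['min_accuracy']:.2f}")
    if pilot["p95_latency_ms"] > criteria["max_p95_latency_ms"]:
        failures.append(f"p95 latency {pilot['p95_latency_ms']} ms > {criteria['max_p95_latency_ms']} ms")
    if pilot["stable_weeks"] < criteria["min_stable_weeks"]:
        failures.append(f"stable for {pilot['stable_weeks']} weeks, need {criteria['min_stable_weeks']}")
    return (len(failures) == 0, failures)

criteria = {"min_accuracy": 0.90, "max_p95_latency_ms": 500, "min_stable_weeks": 4}
pilot = {"accuracy": 0.92, "p95_latency_ms": 350, "stable_weeks": 3}
go, reasons = rollout_decision(pilot, criteria)
print("scale up" if go else f"hold: {reasons}")
```

Returning the reasons, not just a yes or no, tells the team exactly what must improve before the next review.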

Successful organisations avoid these mistakes by planning with intention, preparing data early, and validating the model inside real environments before full deployment. These steps help teams build confidence and move toward a system that supports decisions with stability and accuracy.

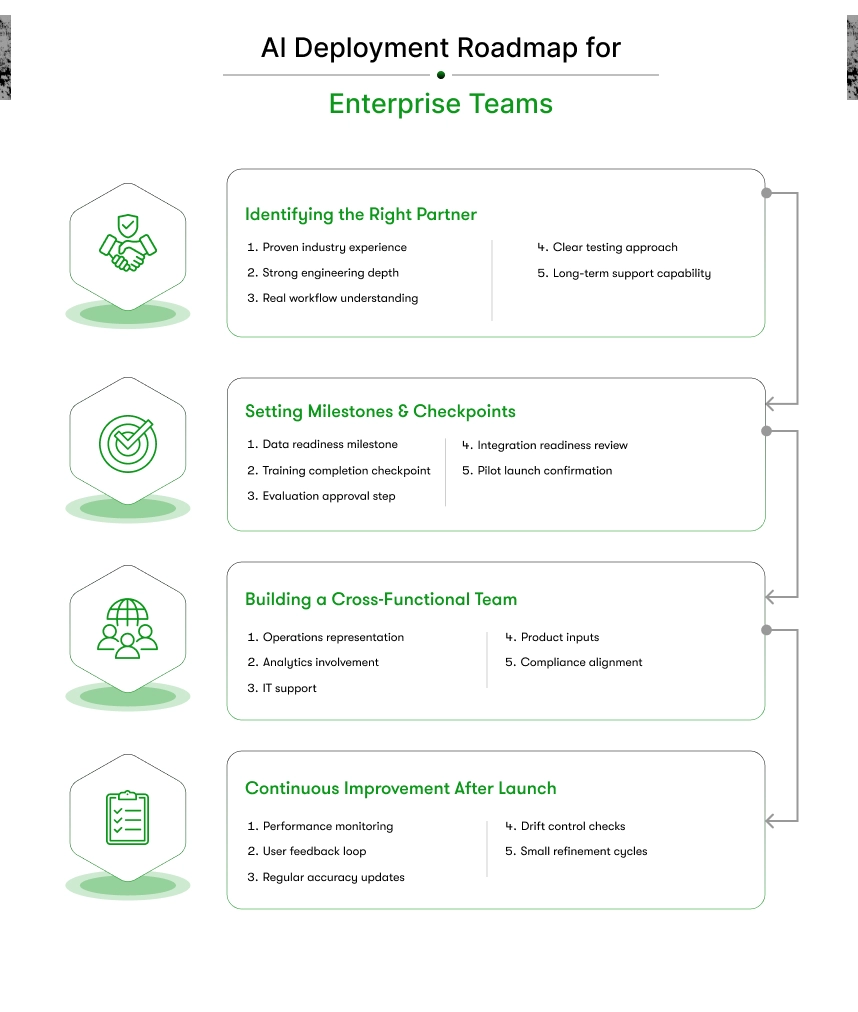

Execution Blueprint: How Leaders Move From Planning to Deployment

Once the decision to build an AI model is made, the next challenge is turning the plan into a functioning system that performs reliably inside real operations. Executives gain stronger outcomes when the implementation process moves in a structured manner.

The steps below represent a practical blueprint that successful teams follow during deployment.

Identifying the Right Development Partner

The correct partner accelerates progress, reduces risk, and helps the organisation avoid unnecessary rework. Selection should focus on alignment with business goals instead of purely technical capabilities.

- Review whether the partner has delivered projects in environments similar to your industry and operational scale.

- Confirm that the team understands both technical engineering and real workflow constraints.

- Evaluate their approach for handling data preparation, model testing, and refinement.

- Ensure the partner can support training, deployment, and long-term adjustments without creating dependency.

Setting Clear Milestones and Success Checkpoints

Progress becomes steady when every phase has a measurable outcome. These checkpoints help teams understand whether the project is moving in the right direction.

- Establish milestones for data readiness, initial training, evaluation, integration, and pilot deployment.

- Define success criteria for each stage so decisions remain consistent.

- Schedule regular reviews involving both technical and business teams.

- Document every checkpoint to maintain clarity throughout the project timeline.

Creating a Cross-Functional Team for Smooth Implementation

AI development affects multiple departments. A coordinated, cross-functional group ensures information moves freely and decisions stay aligned.

- Include representatives from operations, analytics, IT, product, and compliance so each team contributes to key decisions.

- Assign clear responsibility for data access, workflow mapping, and feedback.

- Encourage departments to share examples of real scenarios that the model must support.

- Maintain consistent communication through structured weekly or bi-weekly sessions.

Ensuring Continuous Improvement After Launch

Deployment is not the final stage. Strong operational performance depends on how the model evolves once it enters daily use.

- Track prediction behaviour, timing, and accuracy across different workload levels.

- Prioritise adjustments based on feedback from departments that rely on the system daily.

- Introduce periodic updates to improve accuracy and control drift.

- Plan small refinement cycles instead of large, infrequent upgrades to keep performance steady.
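
Drift is easier to control when it is measured. One widely used measure is the Population Stability Index (PSI), which compares how an input was distributed in the training data with how it is distributed in recent production data. The sketch below is a plain-Python version under simple assumptions: equal-width bins built from the training sample, and a small floor value so empty bins do not cause division by zero. The often-quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift is a convention, not a fixed standard.

```python
import math
from typing import List, Sequence

def psi(baseline: Sequence[float], recent: Sequence[float],
        bins: int = 10, floor: float = 1e-6) -> float:
    """Population Stability Index between a training-time sample and a recent sample."""
    if not baseline or not recent:
        raise ValueError("Both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the first or last bin
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # The floor keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), floor) for c in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

training_scores = [i / 100 for i in range(100)]
recent_scores = [0.9 + i / 1000 for i in range(100)]
print(psi(training_scores, training_scores))       # identical distributions give 0
print(psi(training_scores, recent_scores) > 0.25)  # a strong shift
```

Run per input feature on a regular schedule, a check like this turns "control drift" into a number the team can review alongside accuracy.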

A disciplined execution process allows companies to move from planning to deployment with confidence. This blueprint helps ensure that every stage supports the organisation’s goals and prepares the system for long-term operational success.

Conclusion

A successful AI initiative depends on disciplined planning, dependable data, and a development process that reflects real operational needs. Companies that follow a structured path gain systems that support decisions with accuracy, improve workflow stability, and reduce the friction that slows teams down. This guide gives decision-makers a clear view of what to evaluate, how to prepare, and how to build an AI model that produces results they can trust across departments.

The next step belongs to you. If your organisation is ready to move from planning to execution, the right partner will protect your investment and accelerate progress. Kody Technolab supports companies through each stage with the depth you would expect from a trusted generative AI development company, from early strategy and data preparation to model development, validation, deployment, and ongoing improvement. Our team builds systems that work in real environments and support measurable outcomes.

If you want an AI model that performs with consistency and creates real impact, reach out to Kody Technolab and begin the next stage with clarity.

FAQs: Building an AI Model for Your Organisation

1. How do I know whether my organisation is ready to build an AI model?

You are ready when three conditions are met:

- You have at least one clear, high-value use case with measurable outcomes.

- Your core data sources are accessible, structured, and owned by specific teams.

- Leadership can support a pilot, iteration cycles, and long-term monitoring.

If any of these are missing, begin with data readiness and use-case clarity before investing in development.

2. How long does it take to build an AI model that works in real operations?

Most enterprise-level models take 8–20 weeks, depending on data quality, model complexity, and the number of training cycles.

A simple forecasting or classification model can be ready in 6–8 weeks, while models requiring multi-source data, validation, and pilot testing take longer.

The timeline becomes predictable once the scope, data pipeline, and evaluation process are defined.

3. What is the minimum amount of data required to train an AI model effectively?

There is no universal number. The requirement depends on the use case:

- Structured use cases (scoring, tabular prediction): often thousands of rows.

- Time-series forecasting: months of historical patterns.

- NLP or text-based tasks: thousands of labelled entries.

It’s less about volume and more about relevance, cleanliness, and consistency. A small but high-quality dataset is better than a large, messy one.

4. Should we build an AI model from scratch or use an off-the-shelf tool?

Use off-the-shelf tools when your workflows are standard and accuracy needs are moderate.

Build from scratch when:

- Accuracy, control, domain-specific logic, or privacy matters.

- Your workflows are complex or your data is specialised, because a ready-made tool will limit performance.

Custom development pays off when AI influences decisions daily.

5. What does it cost to build an AI model for enterprise use?

Most enterprise projects fall between $60,000 and $350,000, depending on the complexity of the use case, the training cycles required, how many data sources must be integrated, and the level of ongoing monitoring.

Costs increase when datasets are messy, when multiple systems must be connected, or when compliance adds extra steps.

A clean dataset and a narrow scope keep the budget controlled.

6. How do we measure whether the model is actually helping us?

Organisations track the impact through indicators such as accuracy stability over time, reduction in manual work, lower error rates, faster processing cycles, and improved forecasting quality.

If departments begin making quicker, more confident decisions, and if manual checks decrease, your model is delivering real operational value.
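
These indicators are easiest to discuss when two comparable periods, before and after the model went live, are set side by side. The sketch below assumes the organisation can count cases processed, manual checks, and errors, and can measure average cycle time for each period; the field and function names are hypothetical. Rates are normalised by case volume so that a busier period does not distort the comparison, and negative values mean a reduction.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class PeriodMetrics:
    """Operational counts for one period (hypothetical fields)."""
    cases: int               # total cases processed
    manual_checks: int       # cases staff reviewed by hand
    errors: int              # decisions later found to be wrong
    avg_cycle_hours: float   # average time from intake to decision

def pct_change(old: float, new: float) -> float:
    if old == 0:
        raise ValueError("Baseline value is zero; percentage change is undefined")
    return (new - old) / old * 100

def impact_report(before: PeriodMetrics, after: PeriodMetrics) -> Dict[str, float]:
    """Percentage change per indicator; negative values mean a reduction."""
    return {
        "manual_check_rate": pct_change(before.manual_checks / before.cases,
                                        after.manual_checks / after.cases),
        "error_rate": pct_change(before.errors / before.cases, after.errors / after.cases),
        "cycle_time": pct_change(before.avg_cycle_hours, after.avg_cycle_hours),
    }

before = PeriodMetrics(cases=1000, manual_checks=400, errors=50, avg_cycle_hours=10.0)
after = PeriodMetrics(cases=1200, manual_checks=288, errors=36, avg_cycle_hours=6.0)
for name, change in impact_report(before, after).items():
    print(f"{name}: {change:+.1f}%")
```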

7. How do we ensure the model stays reliable after deployment?

Reliability after launch depends on regular monitoring, timely updates, and feedback from the teams who use the system every day.

AI behaviour shifts when patterns shift, so tracking drift, retraining periodically, and refining decision thresholds become part of long-term success.

Strong post-deployment care prevents accuracy from dropping as conditions evolve.
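
One simple way to decide when periodic retraining is due is to watch accuracy on recent cases once their real outcomes are known, and flag the model for review when it falls meaningfully below the accuracy measured at launch. The sketch assumes outcomes can be matched back to predictions; the class name, the window size and the 5-point tolerance are illustrative assumptions.

```python
from collections import deque
from typing import Optional

class AccuracyMonitor:
    """Rolling accuracy on recent labelled cases, with a simple retraining flag (illustrative)."""

    def __init__(self, launch_accuracy: float, tolerance: float = 0.05, window: int = 500):
        self.launch_accuracy = launch_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # True when a prediction matched the real outcome

    def record(self, prediction: object, actual: object) -> None:
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early cases cannot trigger a retrain on their own
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.launch_accuracy - self.tolerance

monitor = AccuracyMonitor(launch_accuracy=0.90, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record("approve", "approve")
print(monitor.needs_retraining())  # False: every recent case was correct
for _ in range(3):
    monitor.record("approve", "reject")
print(monitor.rolling_accuracy(), monitor.needs_retraining())  # 0.7 True
```

A fixed window keeps the signal focused on current conditions, while the tolerance stops normal week-to-week variation from triggering unnecessary retraining.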

8. What skills must our internal team have before we begin building an AI model?

Your internal team needs solid domain knowledge, familiarity with where information lives inside the organisation, and the ability to judge whether predictions match real operational behaviour.

You do not need deep modelling expertise within your team because an experienced development partner can manage the engineering work.

Your team’s core contribution is to provide context, validate outputs, and confirm that the system supports business goals in a practical way.

9. Can small or mid-size companies build an AI model too?

Yes. Many successful projects begin in smaller companies that focus on one specific problem first.

Teams often start with use cases such as lead scoring, forecasting, ticket routing, or early risk alerts because these areas create daily pressure and have clear patterns.

The key is to select a problem that appears frequently, has reliable information behind it, and can show measurable improvement.

Growth becomes easier once the first use case delivers value and proves the method works.

Contact Information